One of the biggest challenges in cosmology and astrophysics is to determine the parameters that describe the content, geometry, and evolution of our Universe. Gravitational lensing, the deflection of light rays by a gravitational field, is one of the most powerful tools for addressing this challenge: it allows us to study dark matter, dark energy, and other fundamental components of the Universe.

Here I present some of my research topics, including the search for new gravitational lens systems with artificial intelligence.


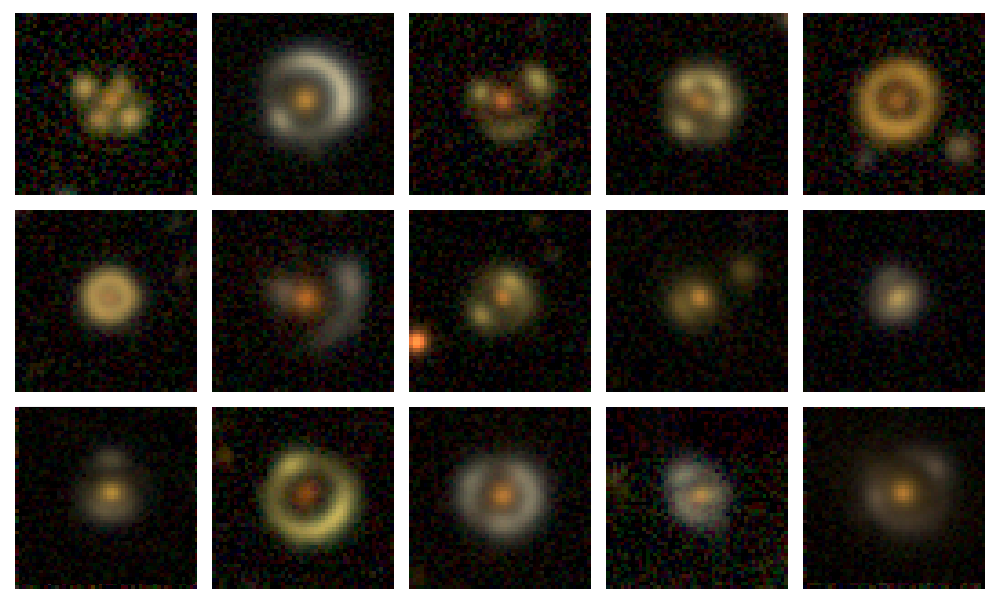

I am currently using Convolutional Neural Networks (CNNs) to identify gravitational lenses in ground-based imaging data. This supervised machine learning approach requires large training, validation, and test datasets of thousands of images. However, only a few hundred strong lens systems are known, so simulated data are necessary.
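A minimal sketch of how a labelled sample of simulated lenses and non-lens cutouts might be divided into the three datasets. The image IDs, the sample sizes, and the 80/10/10 split are hypothetical illustrative choices, not the actual pipeline. Each class is shuffled and split separately so that every subset keeps the same lens fraction:

```python
import random


def stratified_split(positives, negatives, fractions=(0.8, 0.1, 0.1), seed=42):
    """Split labelled image IDs into train/validation/test sets.

    Each class is shuffled and split on its own, so every subset keeps
    roughly the same lens / non-lens ratio as the full sample.
    """
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    rng = random.Random(seed)
    splits = {"train": [], "val": [], "test": []}
    for label, items in ((1, positives), (0, negatives)):
        items = list(items)
        rng.shuffle(items)
        n_train = int(fractions[0] * len(items))
        n_val = int(fractions[1] * len(items))
        splits["train"] += [(x, label) for x in items[:n_train]]
        splits["val"] += [(x, label) for x in items[n_train:n_train + n_val]]
        splits["test"] += [(x, label) for x in items[n_train + n_val:]]
    for subset in splits.values():
        rng.shuffle(subset)  # mix the two classes within each subset
    return splits


# Hypothetical sample: 5000 simulated lenses and 5000 non-lens cutouts.
sims = [f"sim_lens_{i:05d}" for i in range(5000)]
nonlenses = [f"cutout_{i:05d}" for i in range(5000)]
sets = stratified_split(sims, nonlenses)
print({name: len(s) for name, s in sets.items()})
```

Splitting per class avoids a test set that, by chance, contains too few lenses to measure the classifier's completeness reliably.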

To address this, I developed a comprehensive simulation pipeline with Lenstronomy (Birrer & Amara 2018) that generates realistic simulations based on real observational data, unlike previous approaches that relied solely on synthetic inputs.
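The core idea of adding a simulated lens to a real image can be sketched in pure Python. This is not Lenstronomy's API and not the pipeline itself: as simplifying assumptions it uses a singular isothermal sphere (SIS) lens, a circular Gaussian source, no PSF convolution and no added noise, and a flat hypothetical background stands in for a real survey cutout. Each pixel centre is ray-shot back to the source plane and, because lensing conserves surface brightness, takes the source brightness found there:

```python
import math


def sis_ray_shoot(x, y, theta_e):
    """Map an image-plane position (arcsec) to the source plane for an SIS lens.

    The SIS deflection has constant magnitude theta_e and points radially,
    so beta = theta - theta_e * theta / |theta|.
    """
    r = math.hypot(x, y)
    if r == 0.0:
        # Deflection is undefined at the centre, where the SIS central image
        # is infinitely demagnified: send the ray nowhere so it adds no flux.
        return math.inf, math.inf
    return x - theta_e * x / r, y - theta_e * y / r


def lensed_gaussian_source(n_pix, pix_scale, theta_e, src_x, src_y, src_sigma, amp):
    """Render a lensed circular Gaussian source on an n_pix x n_pix grid."""
    half = (n_pix - 1) / 2.0  # grid centred on the lens
    image = []
    for j in range(n_pix):
        y = (j - half) * pix_scale
        row = []
        for i in range(n_pix):
            x = (i - half) * pix_scale
            bx, by = sis_ray_shoot(x, y, theta_e)
            d2 = (bx - src_x) ** 2 + (by - src_y) ** 2
            row.append(amp * math.exp(-d2 / (2.0 * src_sigma ** 2)))
        image.append(row)
    return image


def inject(real_cutout, lensed_light):
    """Add simulated lensed light to a real cutout of the same shape."""
    return [[b + s for b, s in zip(brow, srow)]
            for brow, srow in zip(real_cutout, lensed_light)]


# Hypothetical example: a flat 44x44 "background" stands in for a real cutout.
n, pix_scale = 44, 0.2  # pixels, arcsec/pixel (assumed values)
background = [[10.0] * n for _ in range(n)]
arcs = lensed_gaussian_source(n, pix_scale, theta_e=1.5, src_x=0.1, src_y=0.0,
                              src_sigma=0.15, amp=50.0)
training_image = inject(background, arcs)
```

With the source slightly offset from the lens centre, the sketch produces two bright arcs near the Einstein radius. In a realistic pipeline the lensed light would also be convolved with the survey PSF before being added, so that the injected arcs share the seeing of the real data.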

The next step is to confirm the CNN-selected candidates through follow-up campaigns on large telescopes, using high-resolution imaging to resolve the lensed images and spectroscopy to measure the redshifts of the lens and the source.

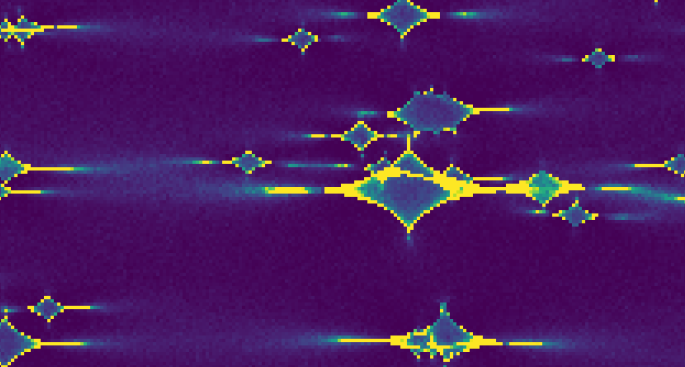

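Quasar microlensing occurs when stars or other compact objects in a foreground lens galaxy further split the light of a lensed quasar image. The resulting micro-images are separated by only micro-arcseconds, far too close to resolve, so microlensing is observed as a magnification or demagnification of the image flux that changes over time. I have worked on two main applications in this area: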

The central regions of quasars, including their accretion disks, are too small to be directly imaged, even with interferometers like the VLTI. However, microlensing offers indirect access to this regime.
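A back-of-the-envelope calculation shows why microlensing reaches scales that imaging cannot. The sketch below computes the Einstein radius of a one-solar-mass star, sqrt(4GM/c² · D_ls/(D_l D_s)), for illustrative angular-diameter distances (assumed round numbers, not a specific lens system), and compares it with a hypothetical accretion disk of 10 light-days radius. Both come out at roughly a micro-arcsecond, about a thousand times below the milliarcsecond-scale resolution of optical and near-infrared interferometers, while the stellar Einstein radius projected onto the source plane is comparable to the disk size, which is what makes the microlensing magnification sensitive to the disk's extent:

```python
import math

G = 6.674e-11          # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8            # speed of light, m / s
M_SUN = 1.989e30       # solar mass, kg
GPC = 3.0857e25        # one gigaparsec, m
LIGHT_DAY = C * 86400  # one light-day, m
RAD_TO_UAS = 180 / math.pi * 3600 * 1e6  # radians -> micro-arcseconds


def einstein_radius_rad(mass_kg, d_l, d_s, d_ls):
    """Angular Einstein radius of a point lens: sqrt(4GM/c^2 * D_ls / (D_l D_s))."""
    return math.sqrt(4 * G * mass_kg / C**2 * d_ls / (d_l * d_s))


# Illustrative angular-diameter distances (assumed, not a real system).
d_l, d_s, d_ls = 1.0 * GPC, 2.0 * GPC, 1.0 * GPC

theta_e = einstein_radius_rad(M_SUN, d_l, d_s, d_ls)
r_e_source = theta_e * d_s  # Einstein radius projected onto the source plane

disk_radius = 10 * LIGHT_DAY  # hypothetical disk size at some wavelength
theta_disk = disk_radius / d_s

print(f"stellar Einstein radius: {theta_e * RAD_TO_UAS:.1f} micro-arcsec")
print(f"  in the source plane:   {r_e_source / LIGHT_DAY:.0f} light-days")
print(f"disk angular radius:     {theta_disk * RAD_TO_UAS:.2f} micro-arcsec")
```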

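By analyzing spectroscopic data for chromatic microlensing signatures (wavelength-dependent magnification), I have estimated the size and temperature profile of accretion disks and tested models such as the thin disk of Shakura & Sunyaev (1973). The effect is chromatic because the emitting region grows with wavelength: in the thin-disk model the temperature falls as T ∝ R^(-3/4), so the size of the region emitting at wavelength λ scales as R ∝ λ^(4/3), and the smaller, bluer regions are more strongly affected by microlensing.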
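The wavelength scaling gives a direct test of the thin-disk model. A minimal sketch, assuming disk sizes have already been measured at several rest-frame wavelengths, fits the power-law slope p in R ∝ λ^p by least squares in log-log space. The wavelengths and sizes are hypothetical values generated here from an exact λ^(4/3) law; a real analysis infers the sizes, with uncertainties, from microlensing simulations:

```python
import math


def fit_size_wavelength_slope(wavelengths, sizes):
    """Least-squares slope p of log R = p * log(lambda) + const."""
    xs = [math.log(w) for w in wavelengths]
    ys = [math.log(r) for r in sizes]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


# Hypothetical rest-frame wavelengths (Angstrom) and disk sizes (light-days),
# generated from the thin-disk scaling R = 4 * (lambda / 2500)^(4/3).
wavelengths = [1500.0, 2500.0, 4000.0, 6000.0]
sizes = [4.0 * (w / 2500.0) ** (4.0 / 3.0) for w in wavelengths]

p = fit_size_wavelength_slope(wavelengths, sizes)
print(f"fitted slope p = {p:.3f} (thin disk: 4/3 = {4 / 3:.3f})")
```

A measured slope consistent with 4/3 supports the thin-disk temperature profile; a significantly different slope, or sizes larger than the model predicts, would point to departures from it.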

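Strongly lensed quasars can provide an independent measurement of the Hubble constant (H0) through time-delay cosmography. Light from the multiple images travels along different paths, so the quasar's intrinsic brightness variations appear in each image at different times; for a given lens mass model, these time delays scale inversely with H0. COSMOGRAIL measures the delays through long-term photometric monitoring, and H0LiCOW combines them with detailed lens models to infer H0.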
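Both steps can be sketched under heavily simplified assumptions: noise-free, gap-free daily light curves generated from a hypothetical two-sinusoid variability model; a delay estimated by a plain grid search that minimises the mean squared mismatch between the shifted curves (far simpler than the curve-shifting methods used by COSMOGRAIL, which must handle noise, gaps and microlensing variability); and a conversion to H0 that relies on the 1/H0 scaling of the delay, with a hypothetical lens-model prediction:

```python
import bisect
import math


def linear_interp(ts, fs, t):
    """Linearly interpolate the sampled curve (ts, fs) at time t.

    ts must be increasing; returns None outside the sampled range.
    """
    if t < ts[0] or t > ts[-1]:
        return None
    k = min(bisect.bisect_right(ts, t) - 1, len(ts) - 2)
    w = (t - ts[k]) / (ts[k + 1] - ts[k])
    return (1.0 - w) * fs[k] + w * fs[k + 1]


def estimate_delay(ts, flux_a, flux_b, trial_delays, min_overlap=0.5):
    """Grid-search the delay dt that best matches B(t) to A(t - dt).

    The cost is the mean squared difference over the overlapping epochs;
    shifts leaving less than min_overlap of the epochs in common are skipped.
    """
    best_dt, best_cost = None, math.inf
    for dt in trial_delays:
        residuals = []
        for t, fb in zip(ts, flux_b):
            fa = linear_interp(ts, flux_a, t - dt)
            if fa is not None:
                residuals.append((fb - fa) ** 2)
        if len(residuals) >= min_overlap * len(ts):
            cost = sum(residuals) / len(residuals)
            if cost < best_cost:
                best_dt, best_cost = dt, cost
    return best_dt


def intrinsic(t):
    """Hypothetical intrinsic quasar variability (two sinusoids)."""
    return math.sin(2 * math.pi * t / 97.0) + 0.5 * math.sin(2 * math.pi * t / 31.0 + 1.0)


true_delay = 42.0                        # days, hypothetical
ts = [float(day) for day in range(400)]  # daily sampling, no gaps or noise
flux_a = [intrinsic(t) for t in ts]
flux_b = [intrinsic(t - true_delay) for t in ts]  # image B lags image A

delay = estimate_delay(ts, flux_a, flux_b, [0.5 * k for k in range(201)])

# For a fixed lens model and fixed other cosmological parameters the delay
# scales as 1/H0, so a delay predicted at a reference H0 rescales directly.
h0_ref = 70.0              # km/s/Mpc, reference value used for the model
model_delay_at_ref = 45.0  # days, hypothetical lens-model prediction
h0 = h0_ref * model_delay_at_ref / delay
print(f"estimated delay: {delay:.1f} d -> H0 = {h0:.1f} km/s/Mpc")
```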

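However, microlensing can bias these measurements. The variability of the accretion disk is thought to be driven from its center, so different radii respond with different light-travel lags; because microlensing magnifies the disk differently in each image, it changes the effective lag of each image and hence the measured delay. I studied and implemented the methods proposed by Tie & Kochanek (2018) to simulate and correct for this microlensing time delay, making the resulting H0 estimates more robust. This methodology is now integrated into the H0LiCOW collaboration’s workflow.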
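The mechanism can be illustrated with a toy model; this is not the Tie & Kochanek (2018) implementation. It assumes a face-on disk whose variable emission follows a hypothetical exponential profile with a 5 light-day scale, a lag equal to the radius (a central driving source at negligible height), and a hypothetical magnification pattern with a single bright spot near the disk center. Comparing the magnification-weighted mean lag with the unlensed one gives the extra delay that microlensing introduces:

```python
import math


def mean_lag(weight, magnification, half_width=50.0, n=400):
    """Mean response lag (days) of a face-on disk sampled on an n x n grid.

    Positions are in light-days. Each pixel's lag is its radius R (driving
    source at the centre, negligible height), and its contribution is
    weighted by weight(R) * magnification(x, y).
    """
    step = 2.0 * half_width / n
    num = den = 0.0
    for i in range(n):
        x = -half_width + (i + 0.5) * step
        for j in range(n):
            y = -half_width + (j + 0.5) * step
            r = math.hypot(x, y)
            w = weight(r) * magnification(x, y)
            num += r * w
            den += w
    return num / den  # the constant pixel area cancels in the ratio


def disk_weight(r, r_s=5.0):
    """Toy variable-emission profile (assumed exponential, scale r_s light-days)."""
    return math.exp(-r / r_s)


def no_microlensing(x, y):
    return 1.0


def magnified_spot(x, y, x0=2.0, amp=4.0, width=3.0):
    """Hypothetical magnification pattern: one bright spot near the disk centre."""
    return 1.0 + amp * math.exp(-((x - x0) ** 2 + y ** 2) / (2.0 * width ** 2))


baseline = mean_lag(disk_weight, no_microlensing)  # analytically 2 * r_s = 10 days
lensed = mean_lag(disk_weight, magnified_spot)
print(f"mean lag without microlensing: {baseline:.2f} d")
print(f"mean lag with microlensing:    {lensed:.2f} d")
print(f"microlensing time delay:       {lensed - baseline:+.2f} d")
```

Because the spot preferentially magnifies the inner, short-lag regions, the mean lag shrinks and the extra delay comes out negative; a spot over the outer disk would tend to lengthen it instead. The numbers depend entirely on the assumed profile and pattern, but the sketch shows why the effect varies with time: as the source moves across the caustic network, the pattern seen by each image changes.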